Bi-ACT: Bilateral Control-Based Imitation Learning via Action Chunking with Transformer

The University of Osaka

Bi-ACT

Bi-ACT Model


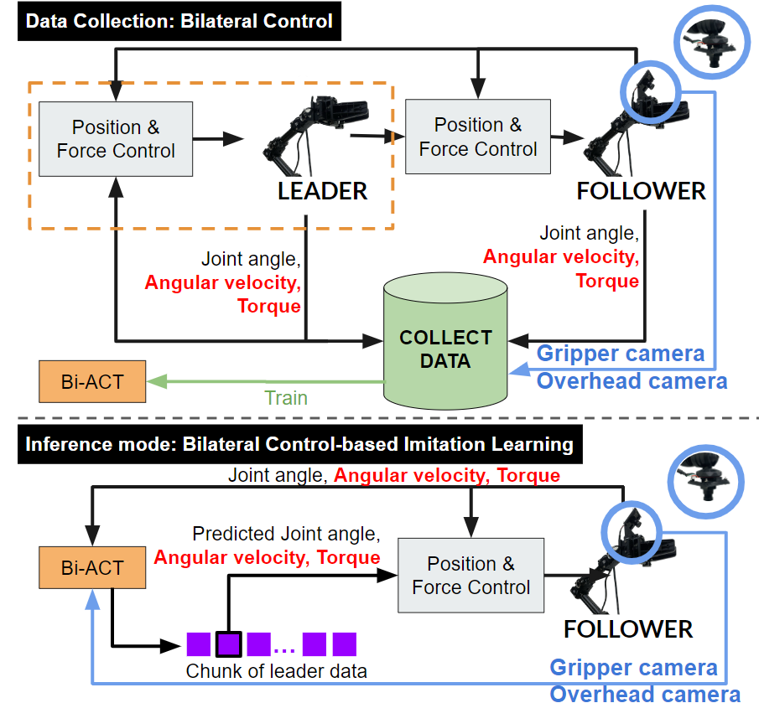

As the architecture shows, the model takes two RGB images as input, each captured at a resolution of 360 x 640: one from the follower’s gripper and one from an overhead camera. It also takes the follower’s current joint data, which comprises three quantities (angle, angular velocity, and torque) for each of five joints, forming a 15-dimensional vector. Using action chunking, the policy outputs a $k \times 15$ tensor representing the leader’s next actions over $k$ time steps. These actions are passed to the controller, which determines the current required at each joint of the follower robot so that it moves as specified.
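The input and output dimensions described above can be illustrated with a minimal sketch. The helper names (`pack_joint_state`, `dummy_policy`) and the ordering of the 15 values (all angles, then all angular velocities, then all torques) are assumptions made for illustration only; the placeholder policy simply repeats the current state and stands in for the trained transformer, which is not reproduced here.

```python
NUM_JOINTS = 5           # from the text: five joints
SIGNALS_PER_JOINT = 3    # angle, angular velocity, torque
STATE_DIM = NUM_JOINTS * SIGNALS_PER_JOINT  # 15


def pack_joint_state(angles, velocities, torques):
    """Concatenate per-joint signals into one 15-dimensional vector.

    The ordering (angles, velocities, torques) is an assumption.
    """
    for name, values in (("angles", angles),
                         ("velocities", velocities),
                         ("torques", torques)):
        if len(values) != NUM_JOINTS:
            raise ValueError(f"{name} must have {NUM_JOINTS} entries")
    return list(angles) + list(velocities) + list(torques)


def dummy_policy(state, k):
    """Hypothetical stand-in for the trained policy.

    Returns a k x 15 action chunk by repeating the current state,
    only to show the shape of the output.
    """
    if len(state) != STATE_DIM:
        raise ValueError(f"state must have {STATE_DIM} entries")
    return [list(state) for _ in range(k)]


state = pack_joint_state([0.1] * 5, [0.0] * 5, [0.2] * 5)
chunk = dummy_policy(state, k=4)
print(len(state), len(chunk), len(chunk[0]))
```

In a real system each row of the chunk would be a predicted leader action for one future time step, handed to the controller in sequence.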

Experiments

Experimental Environment


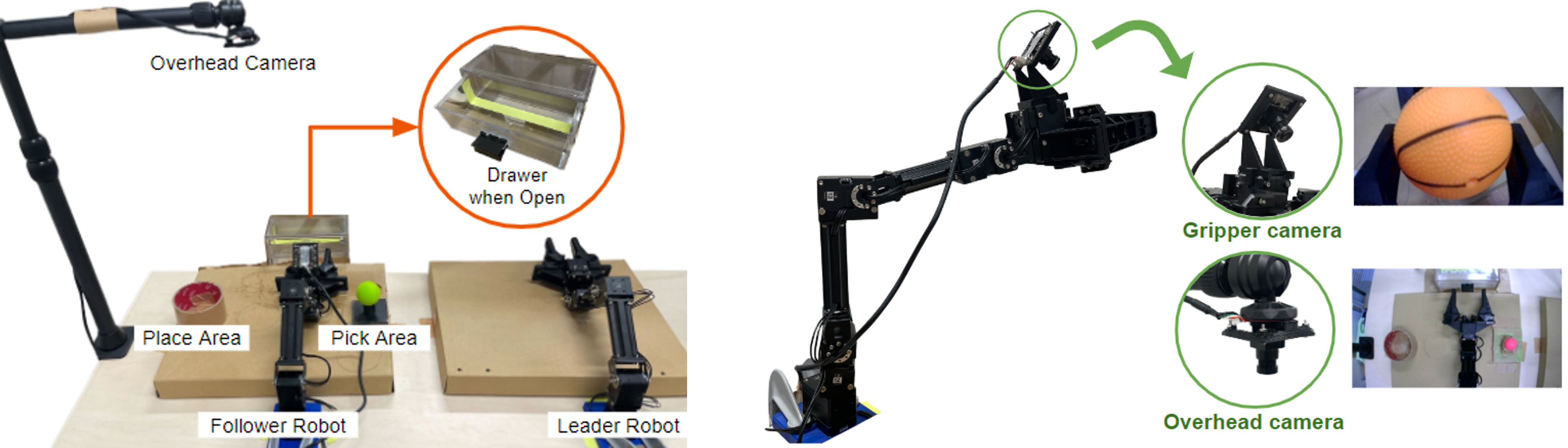

In the environment setup, two robots, designated as the leader and the follower, were positioned adjacent to each other, as shown in the figure above. The experimental environment was arranged on the follower robot’s side, which was the designated site for task execution.

Task


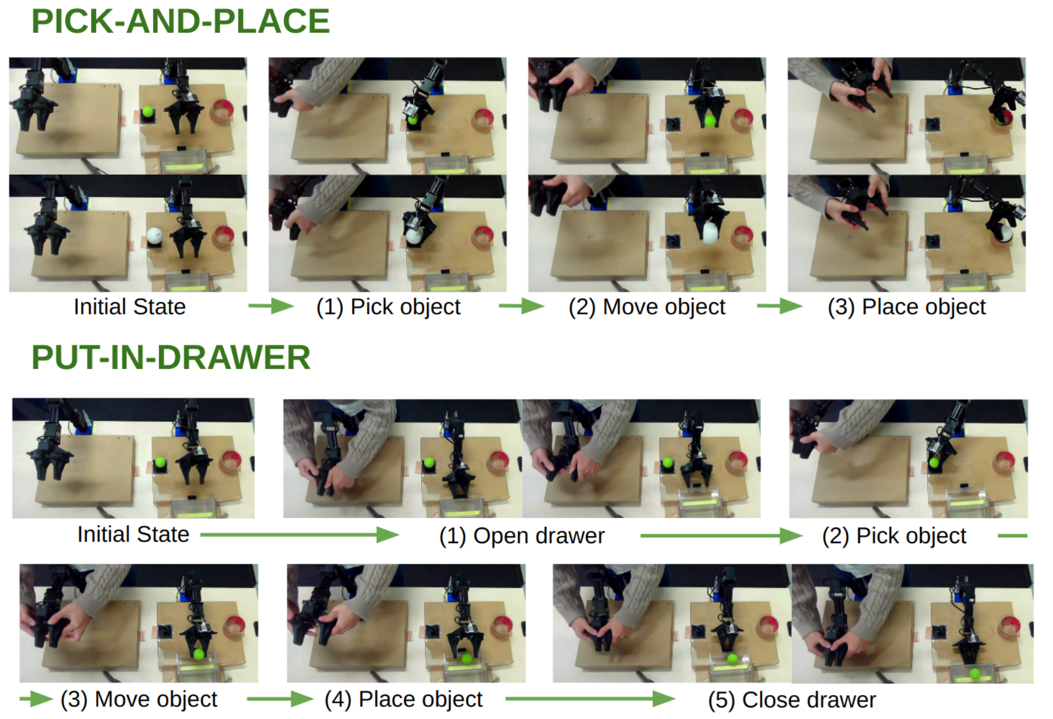

In the first task, ‘Pick-and-Place’, the objective was for the gripper to accurately pick up objects of various shapes, weights, and textures from the pick area and place them within the place area.

The second task was ‘Put-in-Drawer’, which involved moving an object from the pick area to the drawer.

Objects for Pick and Place


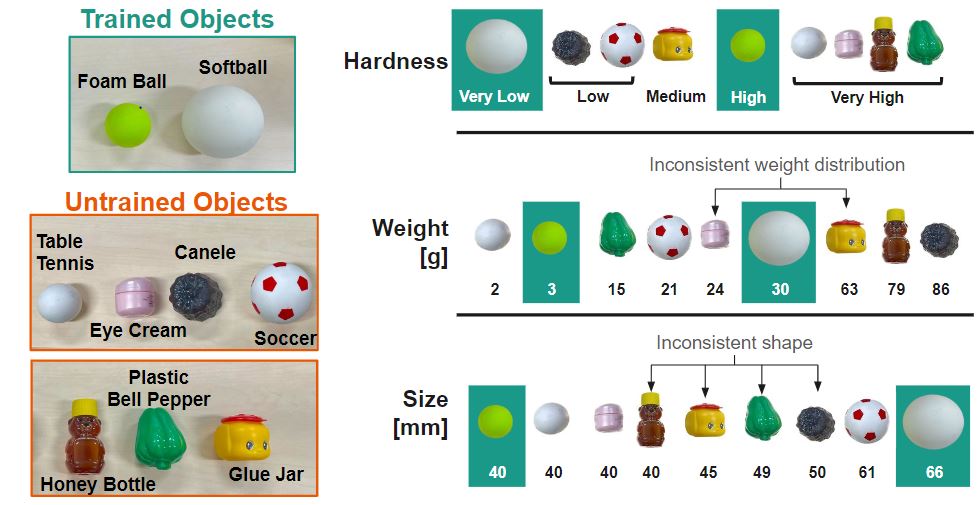

We used a foam ball and a softball during the data collection phase. To test the model, we used these two objects along with seven untrained objects: a table tennis ball, an eye-cream package, a canele, a soccer ball, a plastic bell pepper, a honey bottle, and a glue jar.

Data Collection (Teleoperation: Bilateral Control)

We collected joint angle, angular velocity, and torque data from leader-follower robot demonstrations performed with a bilateral control system. The robot was controlled at a frequency of 1000 Hz, while both the onboard hand RGB camera and the top RGB camera on the environment side of the robot operated at approximately 200 Hz. To align both sets of data with the system’s operating cycle, we adjusted the data to 100 Hz for use as training data, because the model’s inference cycle is approximately 100 Hz.
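The rate alignment described above could look roughly like the following sketch. The source does not state how the data were adjusted to 100 Hz, so both choices here are assumptions: the 1000 Hz robot log is decimated by keeping every tenth sample, and each 100 Hz time step is paired with the camera frame whose timestamp is nearest. The function names are hypothetical.

```python
from bisect import bisect_left


def decimate(samples, src_hz=1000, dst_hz=100):
    """Keep every (src_hz // dst_hz)-th sample (assumed strategy)."""
    if src_hz % dst_hz != 0:
        raise ValueError("src_hz must be a multiple of dst_hz")
    step = src_hz // dst_hz
    return samples[::step]


def nearest_index(timestamps, t):
    """Index of the entry in sorted `timestamps` closest to time t."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    before, after = timestamps[i - 1], timestamps[i]
    # Prefer the earlier frame on ties.
    return i if (after - t) < (t - before) else i - 1


def align_frames(frame_times, target_times):
    """For each target time, pick the index of the nearest camera frame."""
    return [nearest_index(frame_times, t) for t in target_times]


# One second of data, timestamps in milliseconds.
robot_log = list(range(1000))                 # 1000 Hz robot samples
frame_times = [5 * i for i in range(200)]     # ~200 Hz camera frames
robot_100hz = decimate(robot_log)             # 100 samples
target_times = [10 * i for i in range(100)]   # 100 Hz time grid
frame_idx = align_frames(frame_times, target_times)
print(len(robot_100hz), len(frame_idx))
```

Nearest-timestamp matching tolerates the "approximately 200 Hz" camera rate, since it does not require frames to arrive on an exact grid.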

Data Collection of Foamball (Real-Time: 1X)

Data Collection of Soft Tennis Ball (Real-Time: 1X)

Data Collection of Put in Drawer (Real-Time: 1X)

Results (Autonomous)

Pick and Place (Real-Time: 1X)

Put in Drawer (Real-Time: 1X)

Citation

@INPROCEEDINGS{10637173,
  author={Buamanee, Thanpimon and Kobayashi, Masato and Uranishi, Yuki and Takemura, Haruo},
  booktitle={2024 IEEE International Conference on Advanced Intelligent Mechatronics (AIM)},
  title={Bi-ACT: Bilateral Control-Based Imitation Learning via Action Chunking with Transformer},
  year={2024},
  volume={},
  number={},
  pages={410-415},
  doi={10.1109/AIM55361.2024.10637173}}

Contact

Masato Kobayashi (Assistant Professor, The University of Osaka, Japan)

- X (Twitter)
  - English: https://twitter.com/MeRTcookingEN
  - Japanese: https://twitter.com/MeRTcooking
- LinkedIn: https://www.linkedin.com/in/kobayashi-masato-robot/